[The following is a direct cross-post from my other blog – just so you don’t think I’m doing nothing with my free time, these days.]

[Update 20180923: continues from here]

The objective

I started discussing the coastline shapefile problem in first post.

Early on, I found the tool called QGIS Browser and installed it on my desktop. I used this to examine the shapefiles I was creating.

The first step was to look at the “real Earth” OpenStreetMap-provided shapefiles I was trying to emulate – the two mentioned in my previous post:

/openstreetmap-carto/data/land-polygons-split-3857/land_polygons.shp

and

/openstreetmap-carto/data/simplified-land-polygons-complete-3857/simplified_land_polygons.shp

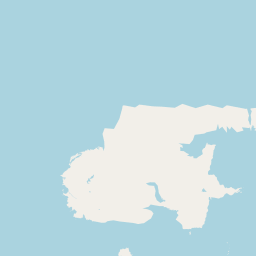

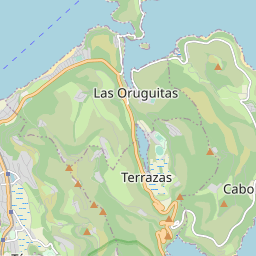

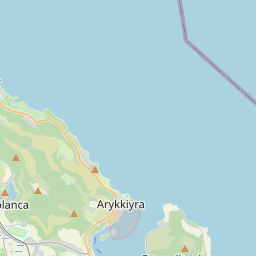

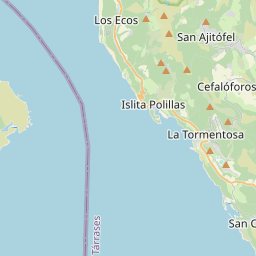

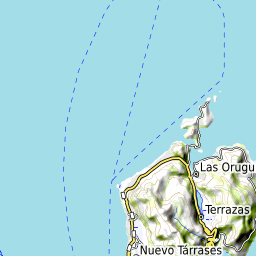

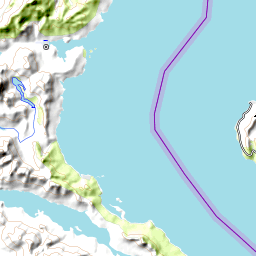

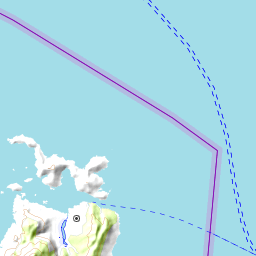

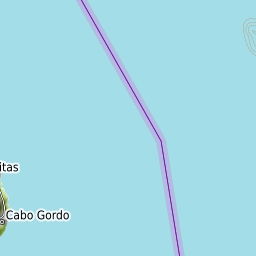

Here are screenshots of each one.

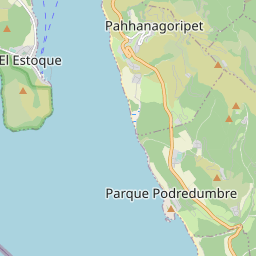

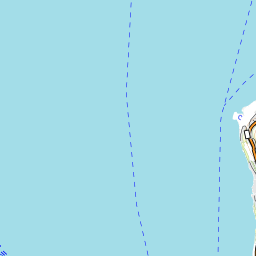

First, land_polygons.shp

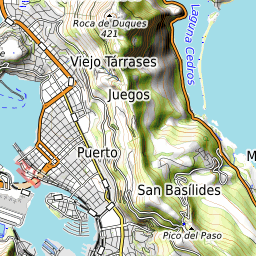

And here is simplified_land_polygons.shp

The structure is pretty straightforward, but – how do I make these? Where do they come from? – aside from the non-useful explanation found most places, which is that “OpenStreetMap generates them from the data”.

The coastline problem

The way that the shapefiles are generated for OpenStreetMap are not well documented. But after looking around, I found a tool on github (a software code-sharing site) developed by one of the OpenStreetMap gods, Jochen Topf. It is called osmcoastline, which seemed to be the right way to proceed. I imagined (though I don’t actually know this) that this is what’s being used on the OpenStreetMap website to generate these shapefiles.

The first thing I had to do was get the osmcoastline tool working, which was not trivial, because apparently a lot of its components and prerequisites are not in their most up-to-date or compatible versions in the Ubuntu default repositories.

So for many of the most important parts, I needed to download each chunk of source code and compile them, one by one. Here is the detailed breakdown (in case someone is trying to find how to do this).

Installing osmcoastline

I followed the instructions on the github site (https://github.com/osmcode/osmcoastline), but had to compile my own version of things more than that site implied was necessary. Note that there are other prerequisites not listed below here, like git, which can be gotten via standard repositories, e.g. using apt-get on Ubuntu. What follows took about a day to figure out, with many false starts and incompatible installs, uninstalls, re-installs, as I figured out which things needed up-to-date versions and which could use the repository versions.

I have a directory called src on my user home directory on my server. So first I went there to do all this work.

cd ~/src

I added these utilities:

sudo apt-get install libboost-program-options-dev libboost-dev libprotobuf-dev protobuf-compiler libosmpbf-dev zlib1g-dev libexpat1-dev cmake libutfcpp-dev zlib1g-dev libgdal1-dev libgeos-dev sqlite3 pandoc

I got the most up-to-date version of libosmium (which did not require compile because it’s just a collections of headers):

git clone https://github.com/osmcode/libosmium.git

Then I had to install protozero (and the repository version seemed incompatible, so I had to go back, uninstall, and compile my own, like this):

Git the files…

git clone https://github.com/mapbox/protozero.git

Then compile it…

cd protozero

mkdir build

cd build

cmake ..

make

ctest

sudo make install

I had to do the same for the osmium toolset:

Git the files…

git clone https://github.com/osmcode/osmium-tool.git

Then compile it…

cd osmium-tool

mkdir build

cd build

cmake ..

make

That takes care of the prerequisites. Installing in the tool itself is the same process, though:

Git the files…

git clone https://github.com/osmcode/osmcoastline.git

Then compile it…

cd osmcoastline

mkdir build

cd build

cmake ..

make

I had to test the osmcoastline tool:

./runtest.sh

Using osmcoastline for OGF data

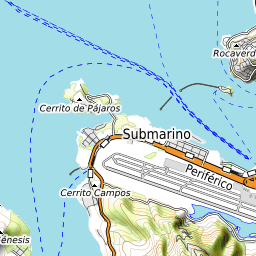

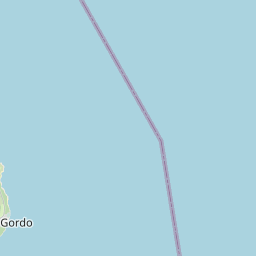

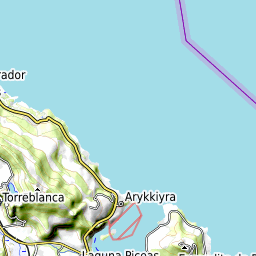

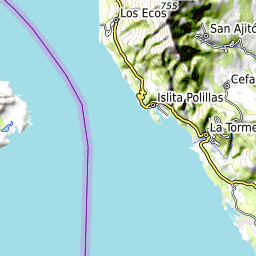

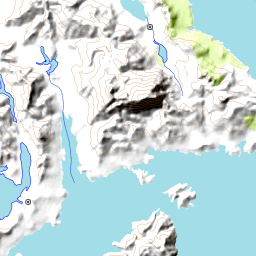

So now I had to try it out. Bear in mind that each command line below took several hours (even days!) of trial and error before I figured out what I was doing. So what you see looks simple, but it took me a long time to figure out. In each case, after making the shapefile, I would copy it over to my desktop and look at it, using the QGIS browser tool. This helped me get an in intuitive, visual feel of what it was I was creating, and helped me understand the processes better. I’ll put in screenshots of the resulting QGIS Browser shapefile preview.

To start out, I decided to use the OGF (OpenGeofiction) planet file. This was because the shapefiles were clearly being successfully generated on the site, but I didn’t have access to them – so it seemed the right level of challenge to try to replicate the process. It took me a few days to figure it out. Here’s what I found.

Just running the osmcoastline tool in what you might call “regular” mode (but with the right projection!) got me a set of files that looked right. Here’s the command line invocation I used:

YOUR-PATH/src/osmcoastline/build/src/osmcoastline --verbose --srs=3857 --overwrite --output-lines --output-polygons=both --output-rings --output-database "YOUR-PATH/data/ogf-coastlines-split.db" "YOUR-PATH/data/ogf-planet.osm.pbf"

Then you turn the mini self-contained database file into a shapefile set using a utility called ogr2ogr (I guess part of osmium?):

ogr2ogr -f "ESRI Shapefile" land_polygons.shp ogf-coastlines-split.db land_polygons

This gives a set of four files

land_polygons.dbf

land_polygons.prj

land_polygons.shp

land_polygons.shx

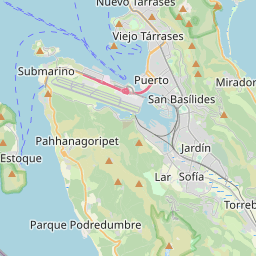

Here is a view of the .shp file in the QGIS Browser. Looks good.

I copied these files into the /openstreetmap-carto/data/land-polygons-split-3857/ directory, and I tried to run renderd. This alone doesn’t show the expected “ghost” of the OGF continenents, though. Clearly the simplified_land_polygons.shp are also needed.

So now I experimented, and finally got something “simplified” by running the following command line invocation (note setting of –max-points=0, which apparently prevents the fractal-like subdivision of complex shapes – technically this is not really “simplified” but the end result seemed to satisfy the osm-carto requirements):

YOUR-PATH/src/osmcoastline/build/src/osmcoastline --verbose --srs=3857 --overwrite --output-lines --output-rings --max-points=0 --output-database "YOUR-PATH/data/ogf-coastlines-unsplit.db" "YOUR-PATH/data/ogf-planet.osm.pbf"

Again, make the database file into shapefiles:

ogr2ogr -f "ESRI Shapefile" simplified_land_polygons.shp ogf-coastlines-unsplit.db land_polygons

This gives another set of four files

simplified_land_polygons.dbf

simplified_land_polygons.prj

simplified_land_polygons.shp

simplified_land_polygons.shx

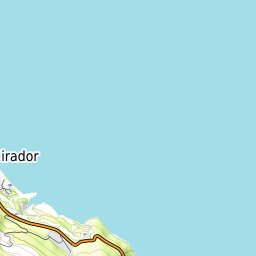

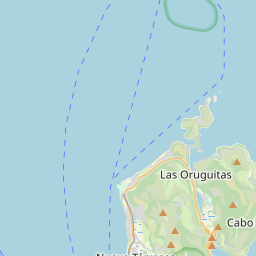

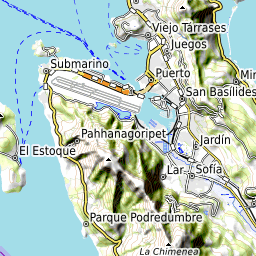

And this .shp looks like this:

Now when I copied these files to the /openstreetmap-carto/data/simplified-land-polygons-complete-3857/ directory, and re-ran renderd, I got a successful ghosting of the continents in the render (no screenshot, sorry, I forgot to take one).

Using osmcoastline for my own data

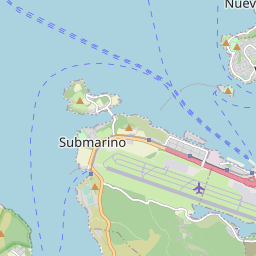

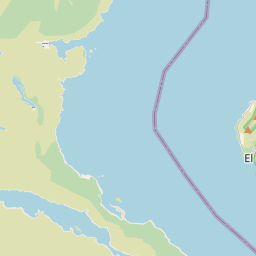

Now I simply repeated the above, in every respect, but substituing my own rahet-planet.osm.pbf file for the ogf-planet.osm.pbf file above. I got the following shapefiles:

land_polygons.shp

simplified_land_polygons.shp

And these, copied to the appropriate osm-carto data directory locations, gives me the beautiful render you see now. [EDIT: Note that the view below of the Rahet planet is “live”, and therefore doesn’t match what shows in the screenshots above. I have moved in a different concept with my planet, and thus I have erased most of the continents and added different ones, and the planet is now called Arhet.]

I actually suspect this way that I did it is not the completely “right” way to do things. My main objective was to give the osm-carto shapefiles it would find satisfactory – it was not to try to reverse-engineer the actual OSM or OGF “coastline” processes.

There may be something kludgey about using the output of the second coastline run in the above two instances as the “simplified” shapefile, and this kludge might break if the Rahet or OGF planet coastlines were more complex, as they are for “Real Earth.” But I’ll save that problem for a future day.

A more immediate shapefile-based project would be to build north and south pole icecaps for Rahet, in parallel with the “Real Earth” Antarctic icesheets that I disabled for the current set-up. You can see where the icecaps belong – they are both sea-basins for the planet Rahet, but they are filled with glacial ice, cf. Antarctica’s probably below-sea-level central basin. And the planet Mahhal (my other planet) will require immense ice caps on both poles, down to about 45° latitude, since the planet is much colder than Earth or Rahet (tropical Mahhal has a climate similar to Alaska or Norway).

Happy mapping.

Music to map by: Café Tacuba, “El Borrego.”